During Tuesday's keynote at the I/O developer conference, Google unveiled new capabilities for its Lens visual search engine and expanded the availability of the platform in smartphone camera apps.

Introduced at last year's edition of the conference, Google Lens launched as an exclusive feature for Google Pixel handsets before expanding to Google Photos and Google Assistant.

Now, Lens will be available directly in the camera app on Google Pixel phones and the LG G7 starting next week, according to Aparna Chennapragada, vice president of product for AR, VR, and vision-based products at Google, who delivered the Google Lens portion of the keynote.

Supported devices from LG Electronics, Motorola, Xiaomi, Sony Mobile, HMD/Nokia, Transsion, TCL, OnePlus, BQ, and Asus will also integrate Lens into their camera apps.

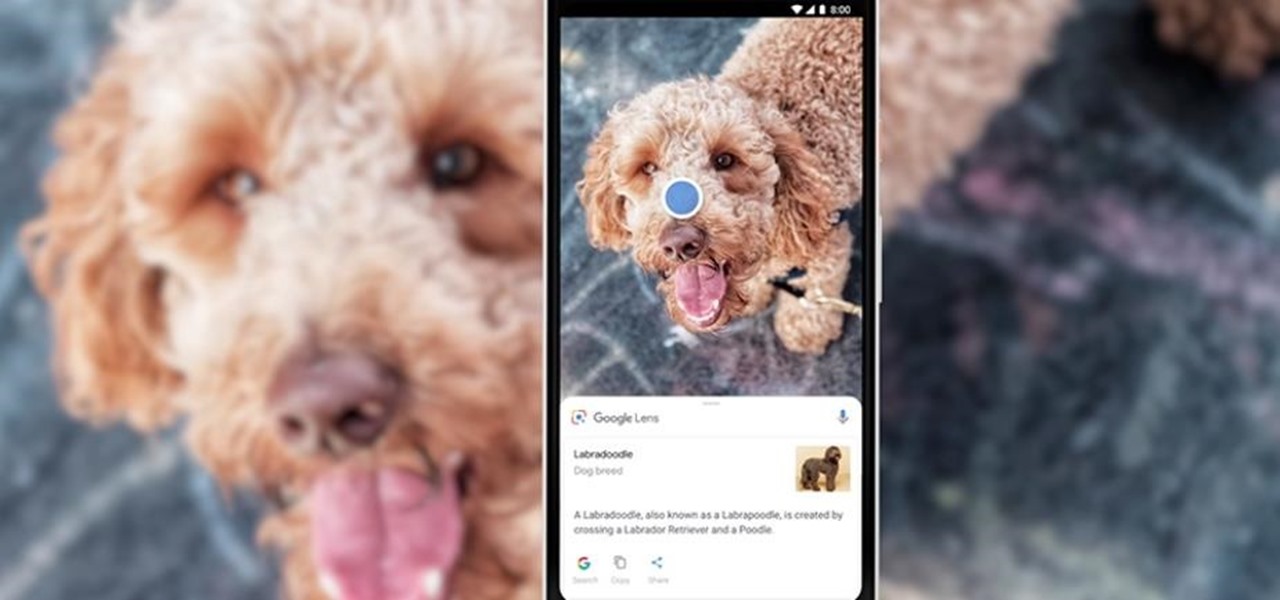

While Lens is already helpful for identifying dog breeds and buildings, among other things, Google has given its capabilities a shot in the arm.

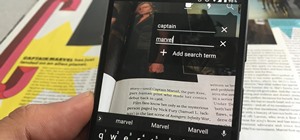

One area of improvement is smart text selection. Lens could already recognize words in pictures; now it can also understand their meaning and context. For instance, when a user points Lens at a restaurant menu, it understands that photos of a dish and its ingredients are useful information to show alongside the name of that menu item.

"With smart text selection, you can now connect the words you see with the answers and actions you need," Chennapragada said during the keynote. "So you can do things like copy and paste from the real world directly into your phone."

Lens has gained a sense of style, too. Users can point Lens at clothing or home decor to learn more about those items, as well as find similarly styled products that match them.

"There's so much information available online, but many of the questions we have are about the world right in front of us," wrote Rajan Patel, director of Google Lens, in a blog post. "That's why we started working on Google Lens, to put the answers right where the questions are, and let you do more with what you see."

Google has also given Lens real-time search capabilities. Users no longer have to take a snapshot to search for the items they see; they can simply point their camera at objects, and Lens will identify them instantly.

"Much like voice, we see vision as a fundamental shift in computing and a multi-year journey," wrote Patel. "We're excited about the progress we're making with Google Lens features that will start rolling out over the next few weeks."

The next stop on the Lens journey will be overlaying live results on recognized images. For example, Lens will eventually be able to recognize a concert poster and start playing an associated music video, a capability Apple has already given developers via ARKit 1.5.

Though Google announced a major update to ARCore, its AR toolkit, later in the day, ARCore was a no-show during the keynote. Advancements in AR during the opening presentations, which leaned heavily on AI, were limited to a preview of AR walking navigation in Google Maps and the Google Lens improvements.

It is becoming increasingly clear that, compared to its peers, Google is more enamored with artificial intelligence, machine learning, and computer vision than with AR. However, these technologies could eventually help make augmented reality experiences smarter.
