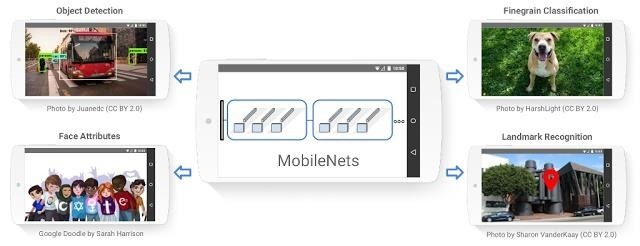

Google might be taking the lead on artificial intelligence in smartphones with its latest announcement, MobileNets. MobileNets is a series of TensorFlow vision models built for mobile devices, described by Google as "mobile-first."

The company hopes MobileNets will maximize the accuracy of visual recognition software without demanding a lot of power. This will be useful for features like Google Photos, which relies on visual recognition to organize pictures, but more importantly, it will help the upcoming Google Lens.

Google Lens is an augmented reality/AI feature to be added to Google Photos and Assistant later this year. The feature acts like a search engine, but with your camera. For example, pointing the camera at a restaurant brings up reviews and other information instantly, without the user having to search for it manually.

MobileNets promises to make features like Lens more capable while consuming less power. The models can analyze faces, detect objects, classify species, and recognize landmarks. Not only could this improve Google's latest innovation, but it could also mark the start of mainstream deep learning on mobile devices.

The launch of MobileNets follows Apple's ARKit announcement, which has sent Google scrambling for better technology in the AI/augmented reality realm. Next Reality's resident expert, Jason Odom, says of the competition:

> Last week's reveal of the ARKit from Apple, which essentially does what Google's longtime AR Project Tango hardware is designed to do, seems to have people scrambling. While Apple's solution may be a less powerful version, it does not require special hardware to accomplish. That is a big deal. And Google knows it.

In the past, mobile devices haven't been powerful enough for AI work, largely because of battery-life constraints. Instead, apps have relied on cloud services that process data remotely and send the results back to the user. This works, but it can introduce latency and raise privacy concerns, since data sent to the cloud can be exposed to hacking and cyberattacks.

Google has already handled the optimization and pre-trained the models, which are designed to keep battery use low while maximizing accuracy. Developers can also choose the model that best fits their app's requirements.

With this release, Google has also made MobileNets available in several sizes, so developers can pick one that fits their storage constraints and acceptable latency. MobileNets' capabilities show just how serious Google is about perfecting AI and augmented reality in smartphones.
