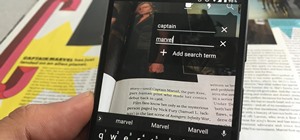

After the mobile augmented reality platforms ARKit and ARCore pushed Google's once-groundbreaking Project Tango (the AR platform that gave us the first smartphones with depth sensors) into obsolescence in 2018, we've seen something of a resurgence of depth sensors, which by then had become a niche component for flagship devices.

Samsung revived the time-of-flight sensor with its Galaxy Note 10+ and Galaxy S10 5G, though it has ditched the sensor in its current-generation models. Radar made a brief cameo via Project Soli in the Google Pixel 4. More recently, Apple implemented LiDAR sensors in the iPhone 12 Pro and iPad Pro lineups after breaking through with the TrueDepth front-facing camera that ushered in the era of The Notch.

Now, Google's AI research team has made a set of tools available for developers to take advantage of the 3D data those sensors generate.

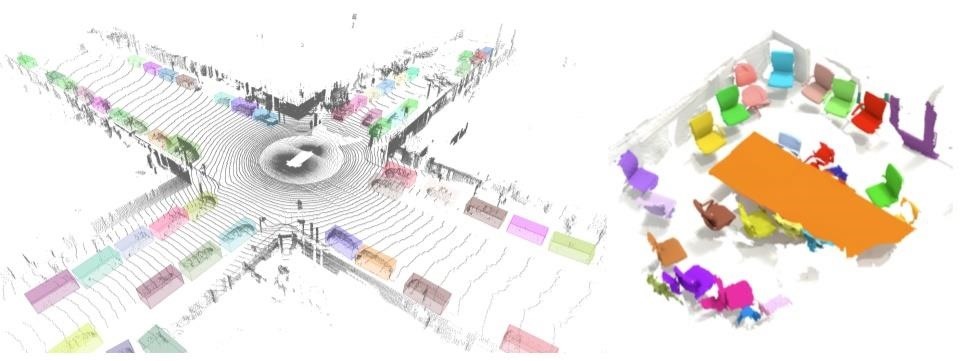

This week, Google added TensorFlow 3D (TF 3D), a library of 3D deep learning models, including 3D semantic segmentation, 3D object detection, and 3D instance segmentation, to the TensorFlow repository for use in autonomous cars and robots, as well as in mobile AR experiences on devices with 3D depth understanding.

"The field of computer vision has recently begun making good progress in 3D scene understanding, including models for mobile 3D object detection, transparent object detection, and more, but entry to the field can be challenging due to the limited availability of tools and resources that can be applied to 3D data," said Alireza Fathi, a research scientist, and Rui Huang, an AI resident, of Google Research in an official blog post. "TF 3D provides a set of popular operations, loss functions, data processing tools, models, and metrics that enables the broader research community to develop, train and deploy state-of-the-art 3D scene understanding models."

The 3D Semantic Segmentation model assigns a class label to every point in a scene, which enables apps to differentiate between one or more foreground objects and the background of a scene, as with the virtual backgrounds on Zoom. Google has implemented similar technology with virtual video backgrounds for YouTube.

By contrast, the 3D Instance Segmentation model separates a group of objects into individual objects, as with Snapchat Lenses that can put virtual masks on more than one person in the camera view.

Finally, the 3D Object Detection model takes instance segmentation a step further by also classifying the objects in view and estimating a 3D bounding box for each one. The TF 3D library is available via GitHub.
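To make the difference between the three tasks concrete, here is a toy sketch in plain Python. It is not TF 3D's API and not how its models work internally: the point coordinates and labels are made up, the semantic labels are hard-coded rather than predicted, and the distance-based grouping and axis-aligned boxes are simple stand-ins for what TF 3D's learned models produce. The function names `instance_segmentation` and `detect_objects` are hypothetical.

```python
from math import dist

# Toy point cloud: (x, y, z) coordinates in meters (made-up values for illustration).
POINTS = [
    (0.0, 0.0, 1.0), (0.1, 0.0, 1.1),   # first "person"
    (2.0, 0.0, 1.0), (2.1, 0.1, 1.0),   # second "person"
    (5.0, 5.0, 0.0), (5.5, 5.0, 0.0),   # background (e.g., a wall)
]

# Semantic segmentation: one class label per point.
# Hard-coded here; a real model would predict these labels.
SEMANTIC = ["person", "person", "person", "person", "background", "background"]


def instance_segmentation(points, labels, target, radius=0.5):
    """Hypothetical helper: group points of class `target` into instances by
    linking any two points closer than `radius` (connected components)."""
    idx = [i for i, lab in enumerate(labels) if lab == target]
    instance_of = {}
    next_id = 0
    for seed in idx:
        if seed in instance_of:
            continue
        # Flood-fill outward from the seed point to collect one instance.
        stack = [seed]
        instance_of[seed] = next_id
        while stack:
            i = stack.pop()
            for j in idx:
                if j not in instance_of and dist(points[i], points[j]) < radius:
                    instance_of[j] = next_id
                    stack.append(j)
        next_id += 1
    return instance_of


def detect_objects(points, instance_of, target):
    """Hypothetical helper: turn each instance into a labeled axis-aligned
    3D bounding box given as (class, min corner, max corner)."""
    boxes = []
    for inst in sorted(set(instance_of.values())):
        members = [points[i] for i, k in instance_of.items() if k == inst]
        lo = tuple(min(p[a] for p in members) for a in range(3))
        hi = tuple(max(p[a] for p in members) for a in range(3))
        boxes.append((target, lo, hi))
    return boxes


instances = instance_segmentation(POINTS, SEMANTIC, "person")
print(instances)  # {0: 0, 1: 0, 2: 1, 3: 1}
for box in detect_objects(POINTS, instances, "person"):
    print(box)
```

Semantic segmentation only says "these four points are people"; instance segmentation splits them into two separate people; detection adds a class and a box around each one.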

While these capabilities have been demonstrated with standard smartphone cameras, the availability of depth data from LiDAR and other time-of-flight sensors opens up new possibilities for advanced AR experiences.

Even without the 3D repository, TensorFlow has contributed to some nifty AR experiences. Wannaby leveraged TensorFlow for its nail polish try-on tool, and TensorFlow also helped Capital One build a mobile app feature that can identify cars and overlay information about them in AR. In the weirder and wilder category, an independent developer used TensorFlow to turn a rolled-up piece of paper into a lightsaber with InstaSaber.

In recent years, Google has harnessed machine learning through TensorFlow for other AR purposes as well. In 2017, the company released its MobileNets repository for image recognition à la Google Lens. TensorFlow is also the technology behind its Augmented Faces API (which also works on iOS), which brings Snapchat-like selfie filters to other mobile apps.

It's also not the first time Google has leveraged depth sensor data for AR experiences. ARCore's Depth API enables occlusion (the ability for virtual content to appear in front of and behind real-world objects) in mobile apps using standard smartphone cameras, but the technology works better with depth sensors.

Machine learning has proven indispensable in creating advanced AR experiences. Based on its focus on AI research alone, Google plays just as crucial a role in the future of AR as Apple, Facebook, Snap, and Microsoft.
